In the digital age, the boundary between human creativity and machine-generated content is becoming increasingly blurred. The rise of generative AI models like DALL-E, Stable Diffusion, and Midjourney has revolutionized the creative industry, offering unprecedented tools for artists, designers, and creators. However, this revolution comes with a significant cost: the potential exploitation of artists' work without their consent.

Nightshade, a tool developed by researchers at the University of Chicago, aims to address this growing concern. It "poisons" images by introducing imperceptible changes that can disrupt the training of AI models. This post explains how Nightshade works, what effects it can have, and what it means for artists and the AI industry.

AI Training and Copyright Infringement

The Rise of Generative AI Models

Generative AI models have transformed the landscape of digital content creation. These models, trained on vast datasets of images, can generate new and unique visuals by learning patterns, styles, and elements from existing artwork. While this technology opens up new avenues for creativity, it also raises significant ethical and legal questions.

The Ethical Dilemma

One of the primary concerns is the unauthorized use of artists' work in training these AI models. AI companies scrape images from the internet and incorporate them into their datasets, often without the artists' consent.

Many artists argue that this practice infringes their copyright, a question courts are still working through, and that it undermines the creative work they rely on for their livelihood. The opt-out mechanisms that some AI companies offer are insufficient, because they place the burden of protecting intellectual property on the artists themselves.

Nightshade: A Revolutionary Tool for Artists

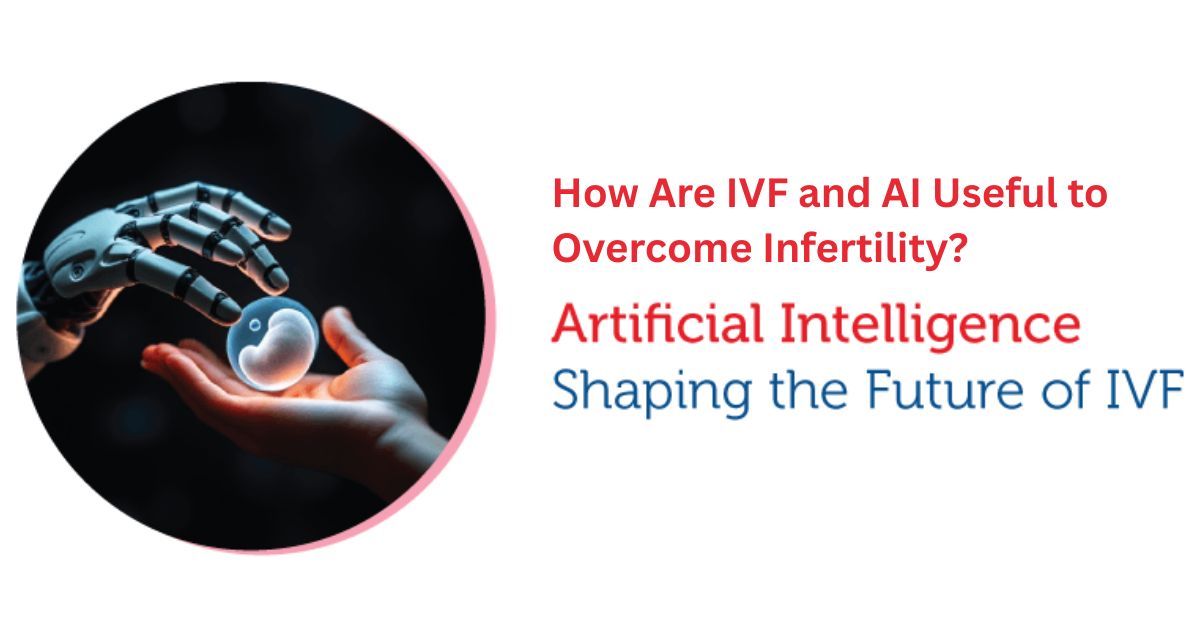

The Concept of "Poisoning" Images

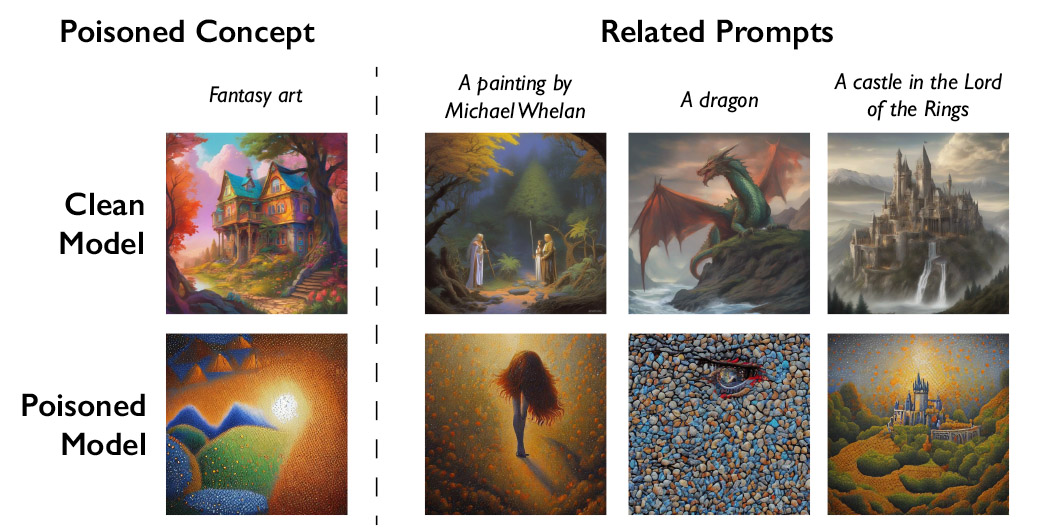

Nightshade introduces a novel approach to combating the unauthorized use of images in AI training. The tool "poisons" images by making small alterations to the pixels. These changes are imperceptible to the human eye but can have significant effects on AI models trained on the images. As a result, a model that generates content based on the poisoned data produces unintended or anomalous outputs.

How Nightshade Works

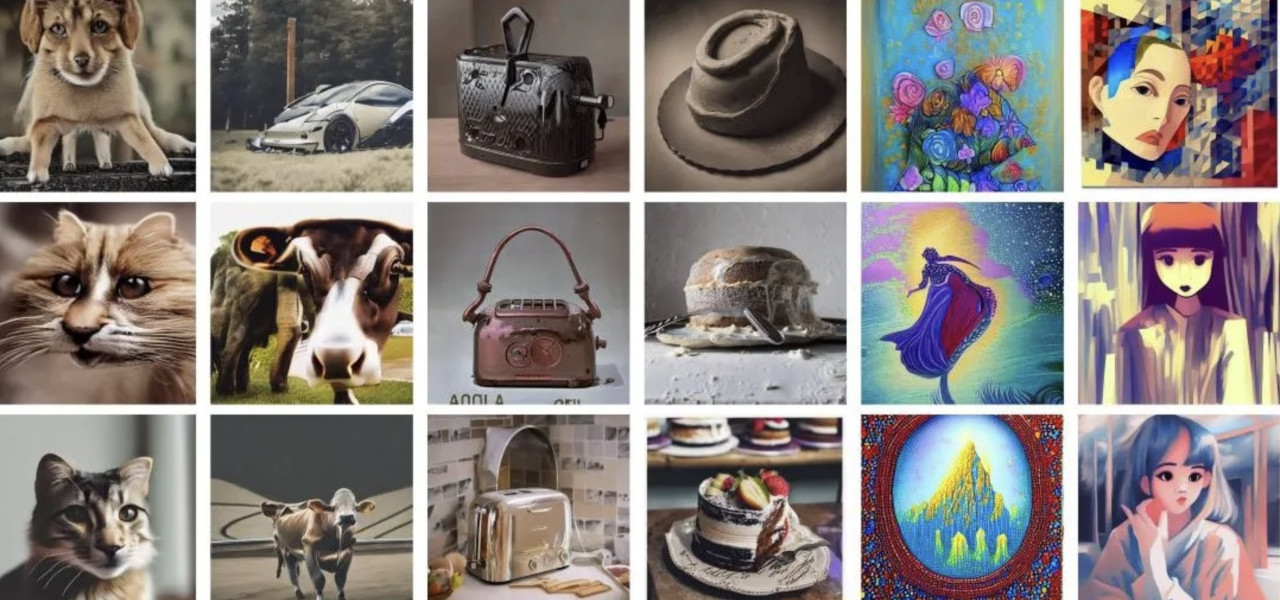

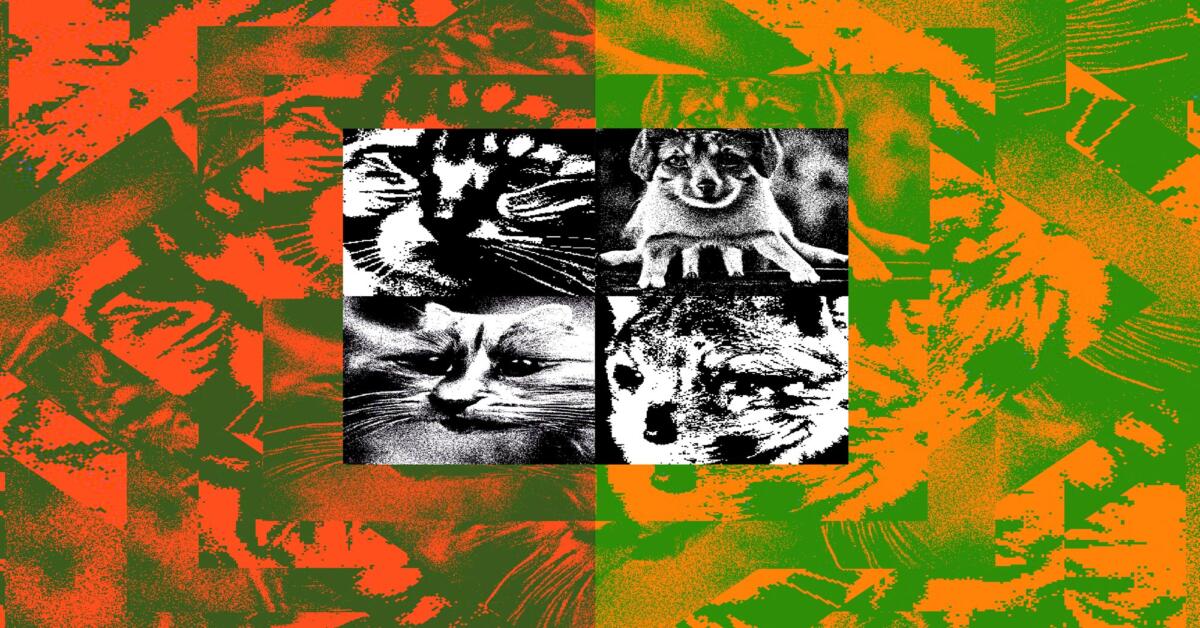

The process begins with the subtle modification of specific pixels within an image. These changes are carefully designed to interfere with the training algorithms of generative AI models. For example, an image of a dog might be altered in such a way that the AI model trained on this image later generates a cat instead. Similarly, an image of a car could be "poisoned" to cause the AI to produce a cow when prompted with a related term.

Impact on Artistic Styles

Nightshade's effects are not limited to object recognition. The tool can also disrupt the AI's ability to replicate specific artistic styles. For instance, an AI model trained on a poisoned image in the style of Cubism might produce outputs that resemble Anime or Impressionism instead. This capability highlights Nightshade's potential to safeguard the diversity of artistic expression by preventing AI models from co-opting and distorting artists' unique styles.

The Mechanisms Behind Nightshade's Effectiveness

The Poisoning Process: Technical Insights

Nightshade's efficacy lies in its ability to introduce "poison" into images without altering their visual integrity. The tool employs advanced algorithms that make minute changes at the pixel level. These modifications exploit the vulnerabilities in AI training processes, where even small discrepancies in data can lead to significant deviations in the generated output.
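To make the idea of a bounded, pixel-level change concrete, here is a minimal Python sketch. It is not Nightshade's actual algorithm: the four-pixel "image", the linear feature extractor in `WEIGHTS`, the budget `EPSILON`, and all function names are assumptions invented for illustration. It only shows the general pattern of nudging pixels so that a model's extracted features drift toward a different target, while every pixel stays within a small budget of its original value.

```python
# Toy sketch only: NOT Nightshade's actual algorithm.
# Assumptions: a 4-pixel "image" with values in [0, 1], a made-up linear
# feature extractor, and a hypothetical per-pixel change budget EPSILON.

EPSILON = 0.03  # hypothetical maximum change allowed per pixel

# Hypothetical weights mapping 4 pixels to 2 features.
WEIGHTS = [
    [0.9, -0.4, 0.3, 0.1],
    [-0.2, 0.8, -0.5, 0.6],
]


def features(pixels):
    """Apply the toy linear feature extractor to a list of pixel values."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in WEIGHTS]


def distance_sq(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def perturb(pixels, target_features, steps=50, lr=0.05):
    """Move the image's features toward target_features within EPSILON."""
    current = list(pixels)
    for _ in range(steps):
        diff = [f - t for f, t in zip(features(current), target_features)]
        # Gradient of the squared feature distance for a linear extractor.
        grad = [
            2 * sum(diff[k] * WEIGHTS[k][i] for k in range(len(WEIGHTS)))
            for i in range(len(current))
        ]
        current = [c - lr * g for c, g in zip(current, grad)]
        # Project back into the EPSILON box around the original pixels,
        # and into the valid pixel range [0, 1].
        current = [
            min(max(c, p - EPSILON, 0.0), p + EPSILON, 1.0)
            for c, p in zip(current, pixels)
        ]
    return current


original = [0.5, 0.5, 0.5, 0.5]            # stands in for a "dog" image
target = features([0.1, 0.9, 0.2, 0.8])    # features of a "cat" image
poisoned = perturb(original, target)

print("largest pixel change:", max(abs(a - b) for a, b in zip(poisoned, original)))
print("feature distance before:", distance_sq(features(original), target))
print("feature distance after: ", distance_sq(features(poisoned), target))
```

In this toy setting no pixel moves by more than `EPSILON`, yet the features end up closer to the target than they started. A real tool would work against a far more complex model and a perceptual notion of "imperceptible", so treat the numbers here as illustrative only.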

The "Bleed-Through" Effect

One of the most intriguing aspects of Nightshade is its "bleed-through" effect. This phenomenon occurs when the poisoning of a small number of images causes disruptions in AI-generated content beyond the directly affected images. For example, poisoning 50 images of dogs might not only cause the AI to generate cats instead of dogs but could also lead to anomalies in other related concepts, such as wolves or foxes. This bleed-through effect amplifies the impact of Nightshade, making it a potent tool against unauthorized AI training.
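One way to build intuition for bleed-through is to think of related concepts as sitting close together in a model's internal representation, so that a disturbance to one concept is more likely to reach its neighbors. The sketch below illustrates only that intuition. The three-dimensional `EMBEDDINGS` are invented for this example and do not come from any real model, and the `exposure` helper is a hypothetical name.

```python
import math

# Hypothetical 3-D concept embeddings, invented purely for illustration.
EMBEDDINGS = {
    "dog": [0.9, 0.8, 0.1],
    "wolf": [0.8, 0.9, 0.2],
    "fox": [0.7, 0.7, 0.3],
    "car": [0.1, 0.2, 0.9],
}


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def exposure(poisoned_concept):
    """Rank the other concepts by similarity to the poisoned one."""
    base = EMBEDDINGS[poisoned_concept]
    scores = {
        name: cosine(base, vec)
        for name, vec in EMBEDDINGS.items()
        if name != poisoned_concept
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


for name, score in exposure("dog"):
    print(f"{name}: similarity to 'dog' = {score:.3f}")
```

With these made-up vectors, "wolf" and "fox" rank well above "car", which mirrors the article's example: concepts near "dog" are the ones most likely to show anomalies when "dog" is poisoned.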

Limitations and Scope

While Nightshade is powerful, its impact on larger models with billions of training images is limited. To significantly disrupt these models, thousands of poisoned images would be required. However, for smaller datasets or specific use cases, Nightshade can be highly effective. The tool serves as a deterrent, signaling to AI companies that the unauthorized use of images comes with consequences.
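A rough piece of arithmetic shows why scale matters. The sketch below computes what share of a concept's training images would be poisoned, and how many poisoned images it would take to reach a chosen share. The dataset sizes and the 5% target are hypothetical numbers picked for illustration, and real-world effectiveness depends on much more than this simple ratio.

```python
import math
from fractions import Fraction


def poison_share(poisoned, clean):
    """Share of a concept's training images that are poisoned."""
    return poisoned / (poisoned + clean)


def needed_for_share(target_share, clean):
    """Smallest poisoned count whose share reaches target_share.

    target_share is a Fraction so the rounding is exact.
    """
    return math.ceil(target_share * clean / (1 - target_share))


# Hypothetical numbers of clean images for a single concept such as "dog".
for clean in (950, 95_000, 9_500_000):
    share = poison_share(50, clean)
    needed = needed_for_share(Fraction(1, 20), clean)
    print(f"clean={clean}: 50 poisoned images are {share:.4%} of the data; "
          f"{needed} would be needed for a 5% share")
```

The same 50 poisoned images that make up a noticeable slice of a small dataset become a negligible fraction of a very large one, which is why disrupting the largest models would take far more poisoned images.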

Ethical and Legal Implications

The Ethical Justification for Nightshade

Nightshade raises important ethical questions about the use of technology in protecting intellectual property. On one hand, it offers artists a means to defend their work against unauthorized exploitation. On the other hand, the potential for malicious use of the tool cannot be ignored. Balancing these considerations is crucial in determining the ethical deployment of Nightshade.

Legal Considerations

The legal landscape surrounding the use of Nightshade is complex. While it provides a method for artists to safeguard their work, it also introduces new challenges in the enforcement of copyright laws. AI companies may argue that the poisoning of images constitutes interference with their business operations. However, the broader issue of unauthorized data scraping must be addressed to ensure that artists' rights are protected.

The Debate on Opting In vs. Opting Out

Some AI companies currently offer artists the option to opt out of having their work included in training datasets. However, this approach places the burden on artists, who must actively protect their intellectual property. Proponents of Nightshade advocate a shift towards an opt-in model, in which artists decide whether their work can be used in AI training. This approach would empower artists and ensure that their creative efforts are respected.

Potential Risks and Mitigations

Risks of Misuse

While Nightshade is designed to protect artists, it also poses risks if misused. Malicious actors could deploy the tool to sabotage AI models for nefarious purposes, such as disrupting legitimate AI applications or causing financial harm to companies. The potential for widespread misuse necessitates careful consideration of how the tool is distributed and regulated.

Strategies for Mitigating Risks

To mitigate the risks associated with Nightshade, several strategies can be implemented. These include limiting access to the tool to verified artists and organizations, incorporating safeguards to prevent malicious use, and developing legal frameworks that address the ethical implications of poisoning images. Collaboration between artists, legal experts, and AI developers is essential to ensure that Nightshade is used responsibly.

The Future of Artist Protection in the Age of AI

The Role of Technology in Protecting Creativity

As AI continues to evolve, so too must the tools and strategies used to protect artistic integrity. Nightshade represents a significant step forward in this effort, offering artists a means to reclaim control over their work. However, it is just one piece of a larger puzzle. The future of artist protection will likely involve a combination of technological, legal, and ethical approaches.

The Need for Collaborative Solutions

Addressing the challenges posed by generative AI models requires collaboration between multiple stakeholders. Artists, AI companies, legal experts, and policymakers must work together to develop solutions that balance innovation with the protection of intellectual property. Tools like Nightshade highlight the need for ongoing dialogue and cooperation in shaping the future of digital creativity.

The Vision for a Fairer AI Ecosystem

Ultimately, the goal is to create a fairer AI ecosystem where artists' rights are respected and creativity is valued. Nightshade offers a glimpse into what this future could look like: one where artists have the power to protect their work from unauthorized use and where AI development proceeds in a manner that is ethical and just.

Final Thoughts

The Nightshade tool represents a groundbreaking development in the fight to protect artists' intellectual property in the age of AI. By "poisoning" images, Nightshade disrupts the training of generative AI models, offering artists a powerful means to defend their work against unauthorized exploitation. However, the tool also raises important ethical, legal, and practical considerations that must be addressed to ensure its responsible use.

As we move forward into an increasingly AI-driven world, the need for robust artist protection mechanisms will only grow. Nightshade is a vital step in this direction, but it is not the end of the journey. Continued innovation, collaboration, and dialogue are essential to building an AI ecosystem that respects and values the contributions of artists, ensuring that creativity continues to thrive in the digital age.
